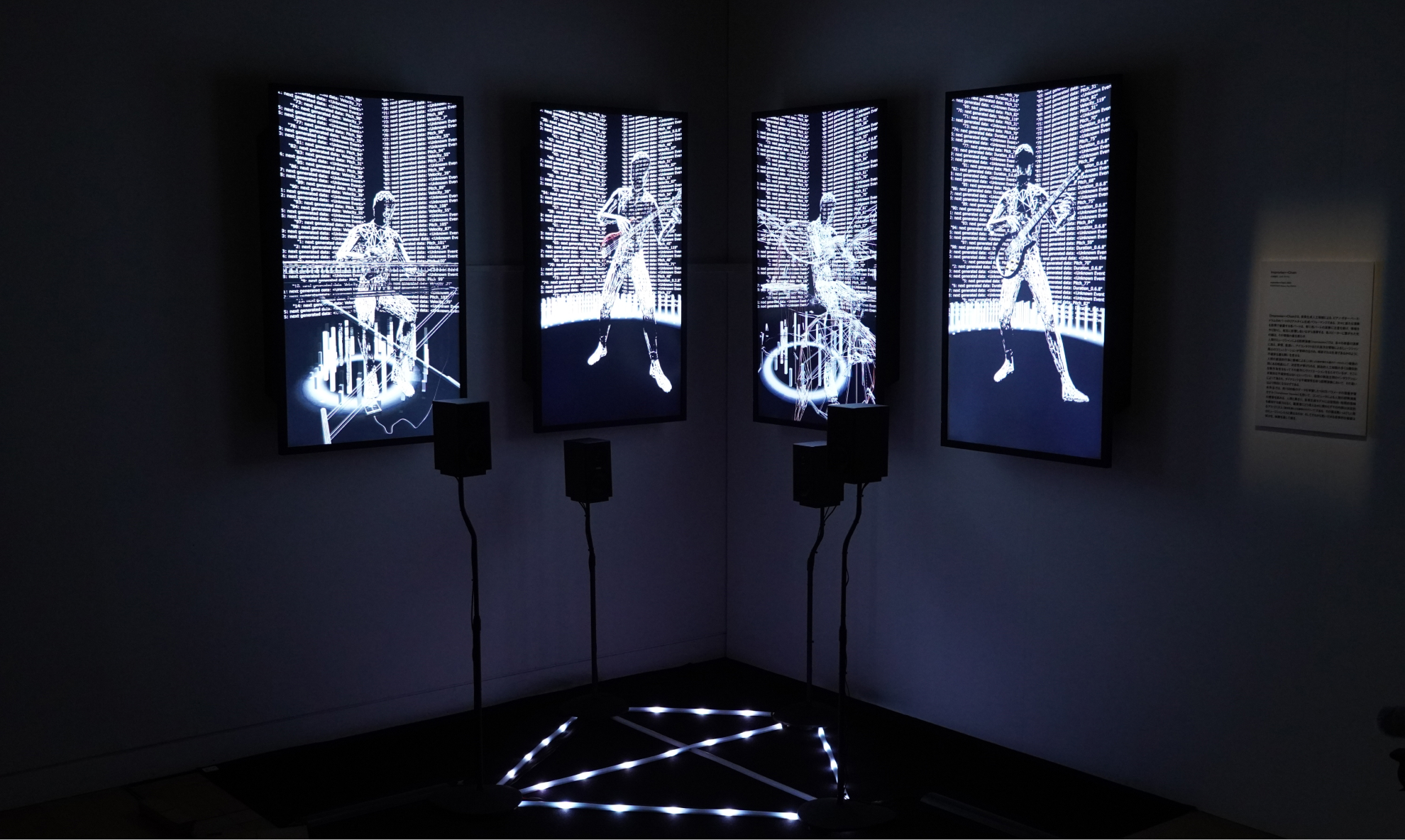

This work was exhibited in ‘MUSES EX MACHINA’ by the TOKUI Nao Computational Creativity Lab, Keio University, from November 14, 2022 to January 15, 2023, at the NTT Intercommunication Center [ICC], Tokyo.

https://www.ntticc.or.jp/en/exhibitions/2022/tokui-nao-computational-creativity-lab-keio-university/

Concept of this Work

This work is an audiovisual installation in which AI-based virtual musicians perform autonomously. It is designed to let the audience explore the differences between human musicians and AI-based virtual musicians.

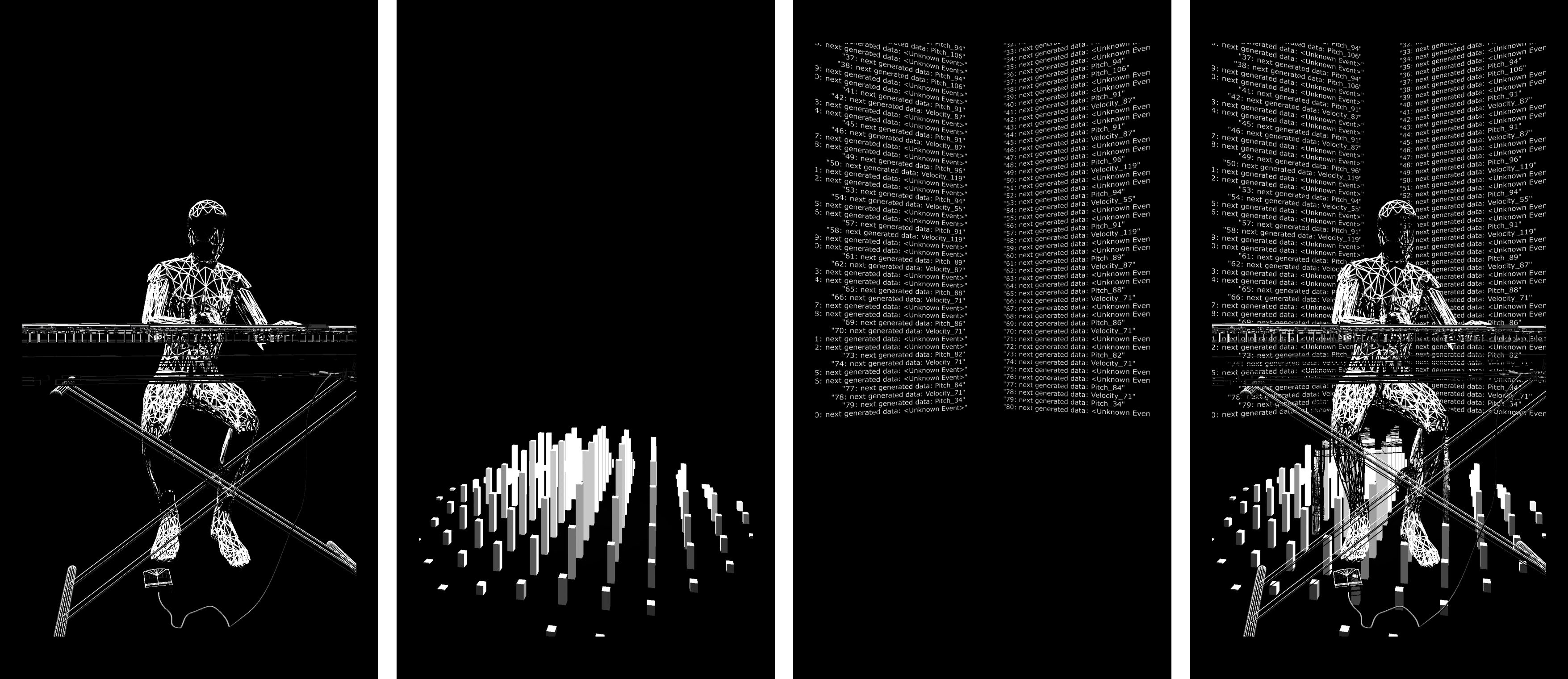

Using a transformer decoder, we developed a four-track symbolic music generation model (melody, bass, chords and accompaniment, and drums) that generates each track in real time, producing an endless chain of phrases.
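The endless chain of phrases can be pictured as an autoregressive loop over a rolling context. The following is only a minimal sketch, not the model used in the installation: `sample_next_token` is a hypothetical placeholder that returns random tokens where a transformer decoder would predict them, and the vocabulary size, phrase length, context length, and track order are all assumed values chosen for illustration.

```python
import random
from collections import deque
from itertools import islice

# "chords" stands for the chords-and-accompaniment track.
TRACKS = ("melody", "bass", "chords", "drums")
VOCAB_SIZE = 128   # hypothetical token vocabulary size
PHRASE_LEN = 16    # hypothetical number of tokens per phrase
CONTEXT_LEN = 64   # hypothetical rolling context length


def sample_next_token(context, rng):
    """Placeholder for the transformer decoder (assumption).

    A real model would compute a distribution over tokens from
    `context`; here we simply draw a random token.
    """
    return rng.randrange(VOCAB_SIZE)


def phrase_stream(seed=0):
    """Yield (track, phrase) pairs forever, one track at a time."""
    rng = random.Random(seed)
    # Rolling context shared by all tracks; old tokens fall off the left.
    context = deque(maxlen=CONTEXT_LEN)
    while True:
        for track in TRACKS:
            phrase = []
            for _ in range(PHRASE_LEN):
                token = sample_next_token(list(context), rng)
                phrase.append(token)
                context.append((track, token))
            yield track, phrase


# Take the first phrase of each track from the endless stream.
first_round = list(islice(phrase_stream(), len(TRACKS)))
```

Because `phrase_stream` is a generator, a playback process can pull phrases at its own pace, which is one simple way to keep generation running without a fixed end.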

3D visuals and LED lights represent the attention information calculated within the model. Human musicians interact through various modalities during a performance, whereas the virtual musicians can communicate only through this attention information. By visualizing it, the work invites viewers to consider the differences between humans and artificial intelligence in music jams.
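One way to turn attention information into light is to sum the attention weights that fall on each track's tokens and scale the result to an LED brightness range. This is a sketch under stated assumptions, not the mapping used in the installation: the function name `attention_to_led`, the per-track summation, and the 0 to 255 brightness range are all hypothetical.

```python
# "chords" stands for the chords-and-accompaniment track.
TRACKS = ("melody", "bass", "chords", "drums")


def attention_to_led(weights, token_tracks, max_level=255):
    """Map attention weights to one brightness level per track.

    weights:      attention weights over the context tokens
    token_tracks: the track each context token belongs to
    max_level:    assumed maximum LED brightness value
    """
    totals = {track: 0.0 for track in TRACKS}
    for weight, track in zip(weights, token_tracks):
        totals[track] += weight
    norm = sum(totals.values())
    if norm == 0:
        # No attention mass at all: keep every LED dark.
        return {track: 0 for track in TRACKS}
    return {track: round(max_level * total / norm)
            for track, total in totals.items()}


# Example: attention split evenly between melody and bass tokens.
levels = attention_to_led(
    [0.5, 0.25, 0.25, 0.0],
    ["melody", "bass", "bass", "drums"],
)
```

In this example the melody and bass LEDs receive equal brightness and the other two stay dark, which reflects where the attention mass was concentrated.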

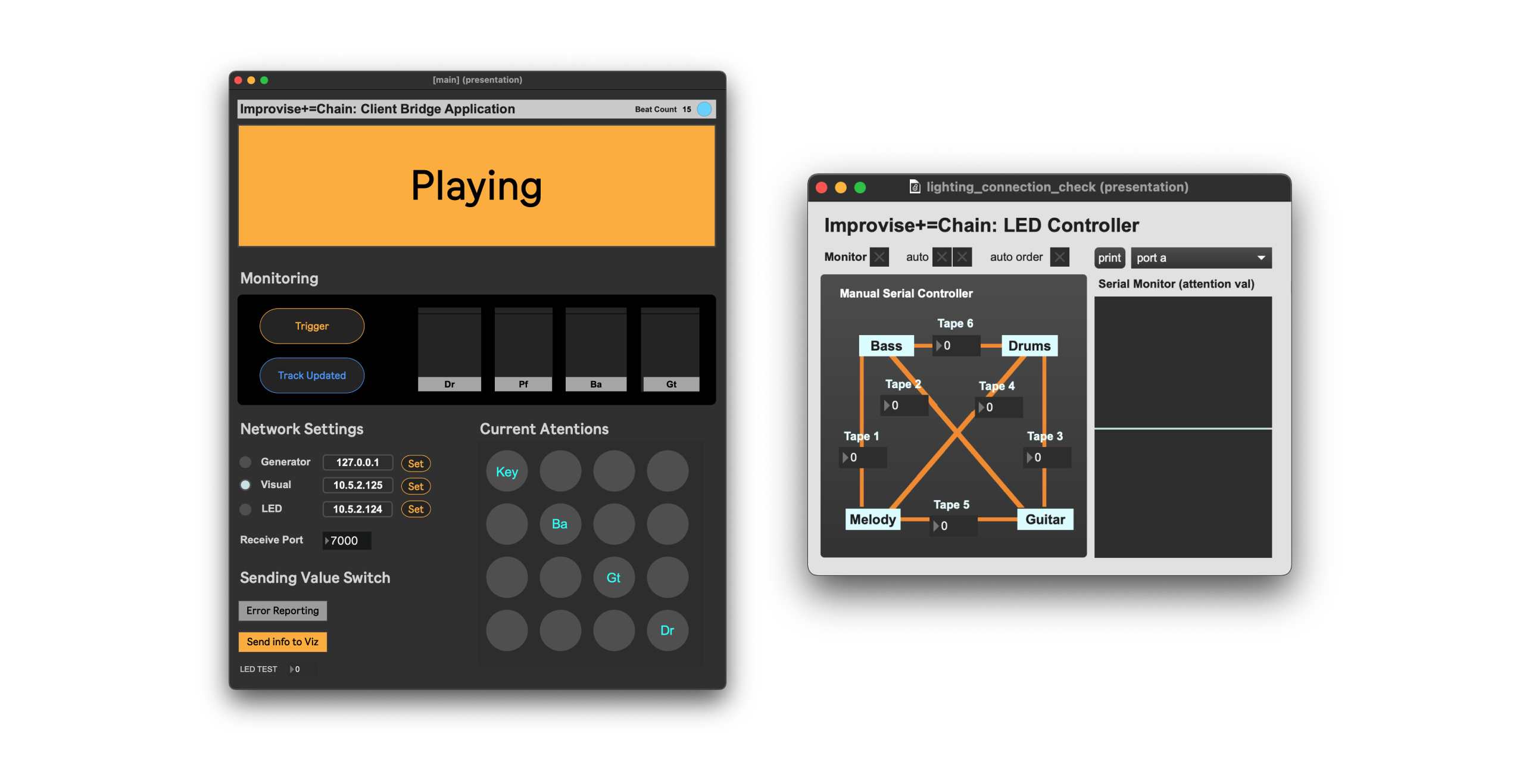

We developed a user interface for installers as a Max/MSP patcher, which serves as a monitor for the LED control values and the attention values.

Research & Development: Atsuya Kobayashi

Concept Design: Atsuya Kobayashi

Visualization: Ryo Simon

Filming: Asuka Ishii, Kazufumi Shibuya